I’ve experienced the confusion surrounding AI in classrooms firsthand. In many of my classes, I have used AI responsibly, especially for brainstorming ideas or getting quick study help. I often ask ChatGPT to give me practice problems to help me study for tests or learn new material. When I struggle with concepts, AI can act as a personalized tutor.

Yet in one of my history classes, I was accused of wrongfully using AI on a paper, even though the assignment wasn’t clear on what counted as “too much.” The experience left me frustrated and worried: I wasn’t trying to cheat, but without clear school rules, there was no way for me to know how AI use would be judged from class to class. Stories like mine aren’t unique. Many students have had similar experiences, and until Poly adopts a consistent schoolwide policy, situations like these will continue to occur.

California recently passed a state bill (SB1288) that will require the California Department of Education to establish a working group of students, teachers, administrators and experts in AI, tasked with creating guidance and policies on the use of AI in education. The state is recognizing that AI is changing education so rapidly that ignoring it isn’t an option. But this bill doesn’t just demand rules; it also emphasizes collaboration. By calling for a working group that includes both educators and students, the state is acknowledging that any meaningful policy has to involve the people it impacts most.

Students are the ones facing the consequences of unclear AI usage rules. Shouldn’t we also be part of creating the solution?

Artificial intelligence isn’t some far-off technology anymore; it’s already a part of students’ daily lives. From ChatGPT to Grammarly to the tools built into Google Docs like the “Help me write” icon, AI is showing up in how we study, write, and even solve simple daily problems. It’s changing how we learn, but while AI use is spreading, our school still doesn’t have a clear and consistent policy that tells students and teachers where the line is.

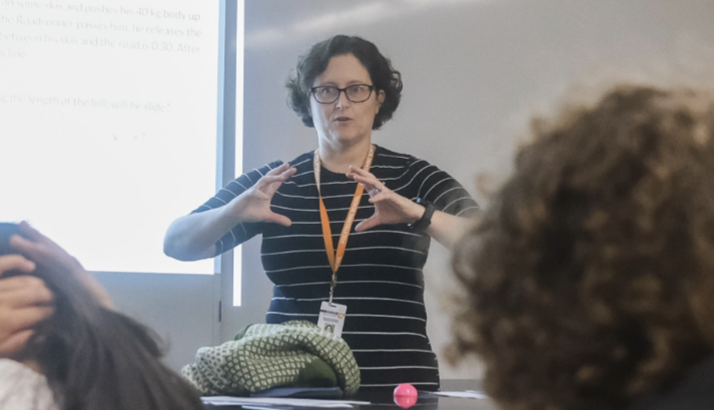

Right now, the use of AI is handled differently in each classroom at Poly. Some teachers allow it for brainstorming or studying, some encourage students to use it for revision and editing, and others ban it outright. A few don’t mention it at all, which leaves students unsure of when and how they are allowed to use it. Only the English department has created a clear, consistent AI policy, integrated into the syllabus.

This inconsistency has real consequences for students in all the other departments, which have yet to create such policies. Students don’t always know what’s “allowed,” and sometimes what is strongly encouraged in one class is punished in another. In one class, a student might think they can use AI to help polish an essay, while in another, they are told that even asking AI for help is considered plagiarism. Inconsistent enforcement leads to frustration and distrust; rules shouldn’t change depending on which teacher is in the classroom.

If Poly is going to establish AI rules, it needs to include students in the process. Students are the ones directly affected. We’re the ones using these tools daily, and without our perspective, policies risk being unrealistic or ignored.

It’s easy for adults to say “just don’t use it,” but the truth is that AI is already built into many of the tools we use. Even Microsoft Word and Google Docs have built-in AI assistance. A complete ban doesn’t solve the issue; it just pushes it underground and creates more confusion. If students don’t see themselves represented in the rules, they’re more likely to ignore them or feel resentment when they are enforced.

Instead, Poly should invite students to help build the policy. Imagine a committee of both teachers and students collaborating to write fair guidelines. Open forums could allow students to share their experiences, good and bad, with teachers and school administrators. Pilot programs could test different drafts of a policy in real classrooms before they’re finalized, so students could give feedback on what works and what doesn’t. Involving students isn’t just fair; it’s also practical. It creates rules that people will actually follow, and students would feel better represented in the policy.

Our school has an opportunity to lead and to create outlets where diverse voices and opinions can be considered. If students, teachers and administrators work together, we can create AI rules that help prepare us for the future, instead of leaving us stuck in the past. Clear policies aren’t just about preventing problems; they’re about building equity, fairness and trust in an age where technology will only keep moving forward. At this moment, we must ask ourselves: will we fall behind, leaving students unprepared for a future in the age of AI? Or will we set the standard for responsible AI use?